In my last article I described how I used Elasticsearch, Fluentd and Kibana (EFK). Besides log aggregation (making log information available at a centralized location), I also described how I created some visualizations within a dashboard.

[https://technology.amis.nl/2019/05/06/using-elasticsearch-fluentd-and-kibana-for-log-aggregation/]

In a new series of articles, I will dive into using Filebeat and Logstash (from the Elastic Stack) to do the same.

In this article I will talk about the installation and use of Filebeat (without Logstash).

EFK

One popular centralized logging solution is the Elasticsearch, Fluentd, and Kibana (EFK) stack.

Fluentd

Fluentd is an open source data collector, which lets you unify the data collection and consumption for a better use and understanding of data.

[https://www.fluentd.org/]

ELK Stack

“ELK” is the acronym for three open source projects: Elasticsearch, Logstash, and Kibana.

[https://www.elastic.co/what-is/elk-stack]

In my previous article I already spoke about Elasticsearch (a search and analytics engine) <Store, Search, and Analyze> and Kibana (which lets users visualize data with charts and graphs in Elasticsearch) <Explore, Visualize, and Share>.

Elastic Stack

The Elastic Stack is the next evolution of the ELK Stack.

[https://www.elastic.co/what-is/elk-stack]

Logstash

Logstash <Collect, Enrich, and Transport> is a server-side data processing pipeline that ingests data from multiple sources simultaneously, transforms it, and then sends it to a “stash” like Elasticsearch.

[https://www.elastic.co/what-is/elk-stack]

Beats

In 2015, a family of lightweight, single-purpose data shippers were introduced into the ELK Stack equation. They are called Beats <Collect, Parse, and Ship>.

[https://www.elastic.co/what-is/elk-stack]

Filebeat

Filebeat is a lightweight shipper for forwarding and centralizing log data. Installed as an agent on your servers, Filebeat monitors the log files or locations that you specify, collects log events, and forwards them either to Elasticsearch or Logstash for indexing.

[https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-overview.html]

This time I won’t be using Fluentd for log aggregation. I leave it up to you to decide which product is most suitable for (log) data collection in your situation.

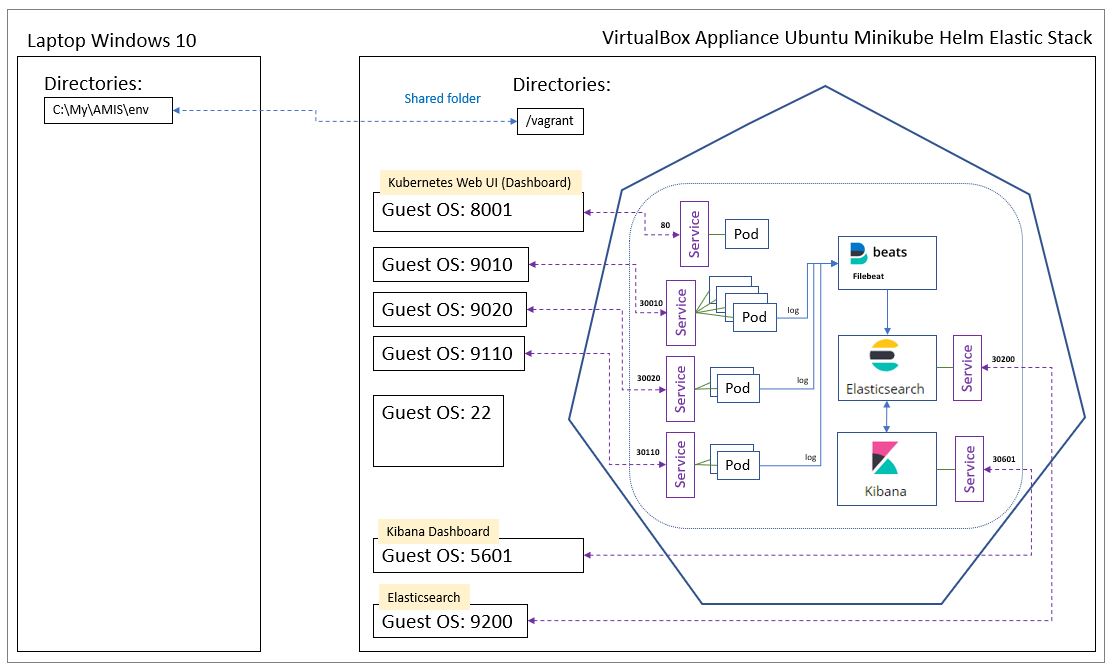

In a previous series of articles, I described an environment I prepared on my Windows laptop with the help of Vagrant: a guest operating system with Docker and Minikube, running within an Oracle VirtualBox appliance. I will use that environment again in this article.

Log aggregation

In a containerized environment like Kubernetes, Pods (and the containers within them) can be created and deleted automatically via ReplicaSets. So, it’s not always easy to know where in your environment you can find the log file you need to analyze a problem that occurred in a particular application. Via log aggregation, the log information becomes available at a centralized location.

In the table below, you can see an overview of the booksservice Pods that are present in the demo environment, including the labels that are used:

| Environment (Spring Boot application) | Database | Service endpoint | Pod | Namespace | Label app | Label version | Label environment |
|---|---|---|---|---|---|---|---|
| DEV | H2 in memory | http://localhost:9010/books | booksservice-v1.0-* | nl-amis-development | booksservice | 1.0 | development |
| DEV | H2 in memory | http://localhost:9020/books | booksservice-v2.0-* | nl-amis-development | booksservice | 2.0 | development |
| TST | MySQL | http://localhost:9110/books | booksservice-v1.0-* | nl-amis-testing | booksservice | 1.0 | testing |

Labels are key/value pairs that are attached to objects, such as pods. Labels are intended to be used to specify identifying attributes of objects that are meaningful and relevant to users, but do not directly imply semantics to the core system. Labels can be used to organize and to select subsets of objects. Labels can be attached to objects at creation time and subsequently added and modified at any time. Each object can have a set of key/value labels defined. Each Key must be unique for a given object.

[https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/]
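Labels become useful when you select on them, for example with `kubectl get pods -l app=booksservice,environment=development`. The bash sketch below shows what such an equality-based selector means: every key=value requirement must be present on the object (a logical AND). The function name `matches_selector` and the comma-separated input format are assumptions made for illustration. This is not how the Kubernetes API server implements selectors.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Kubernetes or kubectl): does an object's
# label set satisfy an equality-based selector?
# Both arguments use the assumed format "key=value,key=value".
matches_selector() {
  local labels="$1" selector="$2" pair key value
  local -A have=()
  local -a pairs=() reqs=()

  # Load the object's labels; each key is unique for a given object.
  IFS=',' read -ra pairs <<< "$labels"
  for pair in "${pairs[@]}"; do
    have["${pair%%=*}"]="${pair#*=}"
  done

  # Every requirement in the selector must match (comma means logical AND).
  IFS=',' read -ra reqs <<< "$selector"
  for pair in "${reqs[@]}"; do
    key="${pair%%=*}"
    value="${pair#*=}"
    [[ -n "${have[$key]+set}" && "${have[$key]}" == "$value" ]] || return 1
  done
  return 0
}

# Labels of a DEV booksservice Pod versus a selector on app and environment
matches_selector "app=booksservice,version=1.0,environment=development" \
  "app=booksservice,environment=development" && echo "selected"
```

Applied to the labels in the table above, the selector app=booksservice,environment=development picks both DEV Pods (versions 1.0 and 2.0) but not the TST Pod.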

Elastic Stack installation order

Install the Elastic Stack products you want to use in the following order:

- Elasticsearch (install instructions)

- Kibana (install)

- Logstash (install)

- Beats (install instructions)

[https://www.elastic.co/guide/en/elastic-stack/current/installing-elastic-stack.html]

When installing Filebeat, installing Logstash (for parsing and enhancing the data) is optional.

I wanted to start simple, so I started with the installation of Filebeat (without Logstash).

Installing Filebeat

You can use Filebeat Docker images on Kubernetes to retrieve and ship the container logs.

You deploy Filebeat as a DaemonSet to ensure there’s a running instance on each node of the cluster.

The Docker logs host folder (/var/lib/docker/containers) is mounted on the Filebeat container. Filebeat starts an input for the files and begins harvesting them as soon as they appear in the folder.

In the Elastic Filebeat documentation, I found an example Kubernetes manifest file for setting up Filebeat:

curl -L -O https://raw.githubusercontent.com/elastic/beats/7.3/deploy/kubernetes/filebeat-kubernetes.yaml

[https://www.elastic.co/guide/en/beats/filebeat/7.3/running-on-kubernetes.html]

In line with how I previously set up my environment, I created the following manifest files from this example manifest file (with my own namespace and labels):

- configmap-filebeat.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-configmap
  namespace: nl-amis-logging
  labels:
    app: filebeat
    version: "1.0"
    environment: logging
data:
  filebeat.yml: |-
    filebeat.inputs:
    - type: container
      paths:
        - /var/log/containers/*.log
      processors:
        - add_kubernetes_metadata:
            in_cluster: true
            host: ${NODE_NAME}
            matchers:
            - logs_path:
                logs_path: "/var/log/containers/"

    # To enable hints based autodiscover, remove `filebeat.inputs` configuration and uncomment this:
    #filebeat.autodiscover:
    #  providers:
    #    - type: kubernetes
    #      host: ${NODE_NAME}
    #      hints.enabled: true
    #      hints.default_config:
    #        type: container
    #        paths:
    #          - /var/log/containers/*${data.kubernetes.container.id}.log

    processors:
      - add_cloud_metadata:
      - add_host_metadata:

    cloud.id: ${ELASTIC_CLOUD_ID}
    cloud.auth: ${ELASTIC_CLOUD_AUTH}

    output.elasticsearch:
      hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
      username: ${ELASTICSEARCH_USERNAME}
      password: ${ELASTICSEARCH_PASSWORD}
```
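The hosts entry uses Filebeat's environment-variable syntax `${VAR:default}`, which falls back to the default value when the variable is not set. As a rough analogy only, the bash sketch below resolves the same entry with bash's `${VAR:-default}`. The function name `resolve_es_host` is hypothetical. Bash also treats an empty variable as unset, and that may differ from Filebeat's behavior.

```bash
#!/usr/bin/env bash
# Sketch only: mimic how the hosts entry in filebeat.yml resolves.
# Filebeat writes ${VAR:default}; the closest bash equivalent is ${VAR:-default}.
# Note: bash also falls back to the default when VAR is set but empty.
resolve_es_host() {
  echo "${ELASTICSEARCH_HOST:-elasticsearch}:${ELASTICSEARCH_PORT:-9200}"
}

# With the values from the DaemonSet's env section
ELASTICSEARCH_HOST=elasticsearch ELASTICSEARCH_PORT=9200 resolve_es_host
# With neither variable set, the defaults apply
( unset ELASTICSEARCH_HOST ELASTICSEARCH_PORT; resolve_es_host )
```

Both calls print elasticsearch:9200. The first uses the values set in the DaemonSet manifest further on, and the second uses the defaults.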

Remark about using a ConfigMap:

By using a ConfigMap, you can provide configuration data to an application without storing it in the container image or hardcoding it into the pod specification.

[https://kubernetes.io/docs/tasks/configure-pod-container/configure-pod-configmap/]

The data field (with key-value pairs) contains the configuration data. In our case, the ConfigMap holds the content of a configuration file (filebeat.yml).

Later on, I will change this and create the ConfigMap from a file, as I also did when I used Fluentd (see previous article).

[https://technology.amis.nl/2019/04/23/using-vagrant-and-shell-scripts-to-further-automate-setting-up-my-demo-environment-from-scratch-including-elasticsearch-fluentd-and-kibana-efk-within-minikube/]
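Creating the ConfigMap from a file comes down to embedding the file's content as a YAML literal block scalar (`|-`) under a key named after the file, as in the manifest above. The usual tool for this is `kubectl create configmap filebeat-configmap --from-file=filebeat.yml --namespace nl-amis-logging`. Purely to show what that embedding looks like, here is a bash sketch. The helper `configmap_from_file` is hypothetical and does no YAML escaping or validation.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wrap a local file in a ConfigMap manifest, embedding the
# file content as a literal block scalar (|-) under a key named after the file.
# kubectl create configmap <name> --from-file=<file> is the usual way to do this.
configmap_from_file() {
  local name="$1" namespace="$2" file="$3"
  printf 'apiVersion: v1\nkind: ConfigMap\nmetadata:\n'
  printf '  name: %s\n  namespace: %s\n' "$name" "$namespace"
  printf 'data:\n  %s: |-\n' "$(basename "$file")"
  # Indent every line by four spaces so it becomes part of the block scalar.
  sed 's/^/    /' "$file"
}

tmp=$(mktemp -d)
printf 'filebeat.inputs:\n- type: container\n  paths:\n    - /var/log/containers/*.log\n' > "$tmp/filebeat.yml"
configmap_from_file filebeat-configmap nl-amis-logging "$tmp/filebeat.yml"
rm -rf "$tmp"
```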

- daemonset-filebeat.yaml

```yaml
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: filebeat-daemonset
  namespace: nl-amis-logging
  labels:
    app: filebeat
    version: "1.0"
    environment: logging
spec:
  template:
    metadata:
      labels:
        app: filebeat
        version: "1.0"
        environment: logging
    spec:
      serviceAccountName: filebeat-serviceaccount
      terminationGracePeriodSeconds: 30
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: filebeat
        image: docker.elastic.co/beats/filebeat:7.3.1
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        env:
        - name: ELASTICSEARCH_HOST
          value: elasticsearch
        - name: ELASTICSEARCH_PORT
          value: "9200"
        - name: ELASTICSEARCH_USERNAME
          value: elastic
        - name: ELASTICSEARCH_PASSWORD
          value: changeme
        - name: ELASTIC_CLOUD_ID
          value:
        - name: ELASTIC_CLOUD_AUTH
          value:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        securityContext:
          runAsUser: 0
          # If using Red Hat OpenShift uncomment this:
          #privileged: true
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: varlog
          mountPath: /var/log
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-configmap
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: varlog
        hostPath:
          path: /var/log
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate
```

- clusterrolebinding-filebeat.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: filebeat-clusterrolebinding
  namespace: nl-amis-logging
subjects:
- kind: ServiceAccount
  name: filebeat-serviceaccount
  namespace: nl-amis-logging
roleRef:
  kind: ClusterRole
  name: filebeat-clusterrole
  apiGroup: rbac.authorization.k8s.io
```

- clusterrole-filebeat.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: filebeat-clusterrole
  namespace: nl-amis-logging
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
```

- serviceaccount-filebeat.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat-serviceaccount
  namespace: nl-amis-logging
```
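The `kubectl auth can-i ... --as=...` check in the filebeat.sh script further on impersonates this service account by its username, which Kubernetes forms as `system:serviceaccount:<namespace>:<name>`. Here is a small bash sketch with a hypothetical helper `sa_username`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: the username Kubernetes assigns to a service account,
# in the form system:serviceaccount:<namespace>:<name>.
sa_username() {
  local namespace="$1" name="$2"
  printf 'system:serviceaccount:%s:%s\n' "$namespace" "$name"
}

sa_username nl-amis-logging filebeat-serviceaccount
```

You could use it as `kubectl auth can-i list pods --as="$(sa_username nl-amis-logging filebeat-serviceaccount)" --namespace nl-amis-logging`. The answer should be yes once the ClusterRoleBinding binds the ClusterRole (get, watch and list on namespaces and pods) to the service account.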

Vagrantfile

I changed the content of Vagrantfile to:

[in bold, I highlighted the changes]

```ruby
Vagrant.configure("2") do |config|
  config.vm.box = "ubuntu/xenial64"

  config.vm.define "ubuntu_minikube_helm_elastic" do |ubuntu_minikube_helm_elastic|

    config.vm.network "forwarded_port",
      guest: 8001,
      host: 8001,
      auto_correct: true

    config.vm.network "forwarded_port",
      guest: 5601,
      host: 5601,
      auto_correct: true

    config.vm.network "forwarded_port",
      guest: 9200,
      host: 9200,
      auto_correct: true

    config.vm.network "forwarded_port",
      guest: 9010,
      host: 9010,
      auto_correct: true

    config.vm.network "forwarded_port",
      guest: 9020,
      host: 9020,
      auto_correct: true

    config.vm.network "forwarded_port",
      guest: 9110,
      host: 9110,
      auto_correct: true

    config.vm.provider "virtualbox" do |vb|
      vb.name = "Ubuntu Minikube Helm Elastic Stack"
      vb.memory = "8192"
      vb.cpus = "1"

      args = []
      config.vm.provision "shell",
        path: "scripts/docker.sh",
        args: args

      args = []
      config.vm.provision "shell",
        path: "scripts/minikube.sh",
        args: args

      args = []
      config.vm.provision "shell",
        path: "scripts/kubectl.sh",
        args: args

      args = []
      config.vm.provision "shell",
        path: "scripts/helm.sh",
        args: args

      args = []
      config.vm.provision "shell",
        path: "scripts/namespaces.sh",
        args: args

      args = []
      config.vm.provision "shell",
        path: "scripts/elasticsearch.sh",
        args: args

      args = []
      config.vm.provision "shell",
        path: "scripts/kibana.sh",
        args: args

      args = []
      config.vm.provision "shell",
        path: "scripts/filebeat.sh",
        args: args

      args = []
      config.vm.provision "shell",
        path: "scripts/mysql.sh",
        args: args

      args = []
      config.vm.provision "shell",
        path: "scripts/booksservices.sh",
        args: args
    end

  end

end
```

In the scripts directory I created a file filebeat.sh with the following content:

```bash
#!/bin/bash
echo "**** Begin installing Filebeat"

#Create Helm chart
echo "**** Create Helm chart"
cd /vagrant
cd helmcharts
rm -rf /vagrant/helmcharts/filebeat-chart/*
helm create filebeat-chart
rm -rf /vagrant/helmcharts/filebeat-chart/templates/*
cp /vagrant/yaml/*filebeat.yaml /vagrant/helmcharts/filebeat-chart/templates

#Exiting: error loading config file: config file ("/etc/filebeat.yaml") can only be writable by the owner but the permissions are "-rwxrwxrwx" (to fix the permissions use: 'chmod go-w /etc/filebeat.yaml')

# Install Helm chart
cd /vagrant
cd helmcharts
echo "**** Install Helm chart filebeat-chart"
helm install ./filebeat-chart --name filebeat-release

# Wait 1 minute
echo "**** Waiting 1 minute ..."
sleep 60

echo "**** Check if a certain action (list) on a resource (pods) is allowed for a specific user (system:serviceaccount:nl-amis-logging:filebeat-serviceaccount) ****"
kubectl auth can-i list pods --as="system:serviceaccount:nl-amis-logging:filebeat-serviceaccount" --namespace nl-amis-logging

#List helm releases
echo "**** List helm releases"
helm list -d

#List pods
echo "**** List pods with namespace nl-amis-logging"
kubectl get pods --namespace nl-amis-logging

#echo "**** Determine the pod name of the filebeat-* pod in namespace nl-amis-logging"
#podName=$(kubectl get pods --namespace nl-amis-logging | grep filebeat- | grep -E -o "^\S*")
#echo "---$podName---"
#echo "**** Check the log file of the $podName pod in namespace nl-amis-logging"
#log=$(kubectl logs $podName --namespace nl-amis-logging | grep "Connection opened to Elasticsearch cluster")
#echo "---$log---"

echo "**** End installing Filebeat"
```
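The commented-out error message in the script comes from Filebeat refusing to load a config file that anyone other than its owner can write to. This is presumably also why the DaemonSet mounts the ConfigMap with `defaultMode: 0600`. The bash sketch below approximates that check (a set group-write or other-write bit means failure) and then applies the fix the message suggests, `chmod go-w`. It is not Filebeat's own code, and the helper name `only_owner_writable` is hypothetical. Filebeat may check more than this (ownership, for instance). `stat -c` assumes GNU coreutils.

```bash
#!/usr/bin/env bash
# Sketch of a permission check similar to the one behind the error message:
# succeed only if neither group nor others may write to the file.
# Assumes GNU coreutils stat (stat -c). Not Filebeat's actual implementation.
only_owner_writable() {
  local mode
  mode=$(stat -c '%a' "$1") || return 2   # octal mode, e.g. 600 or 777
  # 022 (octal) covers the group-write and other-write bits.
  (( (8#$mode & 8#022) == 0 ))
}

cfg=$(mktemp)
chmod 0777 "$cfg"   # the mode from the error message: -rwxrwxrwx
only_owner_writable "$cfg" || echo "too permissive, fixing with: chmod go-w"
chmod go-w "$cfg"
only_owner_writable "$cfg" && echo "accepted: $(stat -c '%A' "$cfg")"
rm -f "$cfg"
```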

From the subdirectory named env on my Windows laptop, I opened a Windows Command Prompt (cmd) and typed: vagrant up

This command creates and configures guest machines according to your Vagrantfile.

[https://www.vagrantup.com/docs/cli/up.html]

My demo environment now looks like:

Via the Kubernetes Web UI (Dashboard) I checked that the Filebeat components were created (in the nl-amis-logging namespace):

- configmap-filebeat.yaml

Navigate to Config and Storage | Config Maps:

As mentioned earlier, the data field (with key-value pairs) contains the configuration data. In our case the ConfigMap holds information in the form of the content of a configuration file (filebeat.yml).

I tried another way to check the content of the configuration file.

I executed a command in the running Filebeat container to print the file:

kubectl exec -it filebeat-daemonset-q5pb2 --namespace nl-amis-logging -- cat /etc/filebeat.yml

With the following output:

```yaml
filebeat.inputs:
- type: container
  paths:
    - /var/log/containers/*.log
  processors:
    - add_kubernetes_metadata:
        in_cluster: true
        host: ${NODE_NAME}
        matchers:
        - logs_path:
            logs_path: "/var/log/containers/"

processors:
  - add_cloud_metadata:
  - add_host_metadata:

cloud.id: ${ELASTIC_CLOUD_ID}
cloud.auth: ${ELASTIC_CLOUD_AUTH}
```

Remark:

The double dash symbol “--” is used to separate the arguments you want to pass to the command from the kubectl arguments.

[https://kubernetes.io/docs/tasks/debug-application-cluster/get-shell-running-container/]
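To make the `--` convention concrete, this bash sketch splits an argument list at the first `--`. Everything before it belongs to the tool itself, and everything after it is passed on untouched to the command. The function `split_at_double_dash` is hypothetical and only illustrates the convention. It is not how kubectl parses its flags.

```bash
#!/usr/bin/env bash
# Hypothetical illustration of the "--" convention: arguments before the first
# "--" are the tool's own, everything after it belongs to the command to run.
split_at_double_dash() {
  local -a own=() cmd=()
  local seen=0 arg
  for arg in "$@"; do
    if (( ! seen )) && [[ $arg == -- ]]; then
      seen=1          # only the first "--" acts as the separator
      continue
    fi
    if (( seen )); then cmd+=("$arg"); else own+=("$arg"); fi
  done
  printf 'own: %s\n' "${own[*]}"
  printf 'cmd: %s\n' "${cmd[*]}"
}

split_at_double_dash -it filebeat-daemonset-q5pb2 --namespace nl-amis-logging -- cat /etc/filebeat.yml
```

With the arguments of the kubectl exec command above, it prints `own: -it filebeat-daemonset-q5pb2 --namespace nl-amis-logging` and `cmd: cat /etc/filebeat.yml`.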

- daemonset-filebeat.yaml

Navigate to Workloads | Daemon Sets:

- clusterrolebinding-filebeat.yaml

Not applicable.

- clusterrole-filebeat.yaml

Navigate to Cluster | Roles:

- serviceaccount-filebeat.yaml

Navigate to Config and Storage | Secrets:

Docker container log files

Every Docker container has a folder “/var/lib/docker/containers/<containerID>” on the host machine, which contains the log file “<containerID>-json.log”.

So, I needed to know the containerID of the Pods related to the booksservice in my demo environment.

I got the information using several kubectl commands, leading to the following result:

| Pod | Namespace | Label app | Label version | Label environment | Container ID | Container name |
|---|---|---|---|---|---|---|
| booksservice-v1.0-68785bc6ff-cq769 | nl-amis-development | booksservice | 1.0 | development | docker://b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9 | booksservice-v1-0-container |
| booksservice-v1.0-68785bc6ff-x7mf8 | nl-amis-development | booksservice | 1.0 | development | docker://bff91eb16a15d0a2058919d7ce7b5077ea9d3f0542c7930f48b29ca1099a54ae | booksservice-v1-0-container |
| booksservice-v2.0-869c5bb47d-bwdc5 | nl-amis-development | booksservice | 2.0 | development | docker://4344b9a63ac54218dac88148203b2394ac973fe5d1f201a1a870f213e417122c | booksservice-v2-0-container |
| booksservice-v2.0-869c5bb47d-nwfgf | nl-amis-development | booksservice | 2.0 | development | docker://6b0e9a44932986cc6ae2353f28d4c9aff32e249abd0ac38ee22a27614aecb30f | booksservice-v2-0-container |
| booksservice-v1.0-5bcd5fddbd-cklsw | nl-amis-testing | booksservice | 1.0 | testing | docker://171b2526ae9d147dab7fb2180764d55c03c7ad706eca605ad5c849aafef5d38d | booksservice-v1-0-container |
| booksservice-v1.0-5bcd5fddbd-n9qkx | nl-amis-testing | booksservice | 1.0 | testing | docker://f7a9b8a4021073f8c7daba0000482f9f9356495beec7c8d49b9b45f0055f9c20 | booksservice-v1-0-container |
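Given a containerID from the table, the Docker log file path follows directly from the /var/lib/docker/containers/<containerID> convention described above. Here is a minimal bash sketch. It assumes the default Docker data root /var/lib/docker and the json-file logging driver, and the helper name `docker_log_path` is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a containerID as reported by Kubernetes
# (status.containerStatuses[].containerID, e.g. docker://<id>) to the log file
# Docker writes for that container. Assumes the default data root
# /var/lib/docker and the json-file logging driver.
docker_log_path() {
  local id="${1#docker://}"   # strip the runtime prefix, if present
  printf '/var/lib/docker/containers/%s/%s-json.log\n' "$id" "$id"
}

docker_log_path docker://b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9
```

For the first Pod in the table, this yields the same file that is inspected with sudo tail further on.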

- Command to list all Pods

kubectl get pods --all-namespaces

With the following output:

```text
NAMESPACE             NAME                                    READY   STATUS    RESTARTS   AGE
kube-system           coredns-576cbf47c7-jvbzp                1/1     Running   0          23h
kube-system           coredns-576cbf47c7-mpl8t                1/1     Running   0          23h
kube-system           etcd-minikube                           1/1     Running   0          23h
kube-system           kube-addon-manager-minikube             1/1     Running   0          23h
kube-system           kube-apiserver-minikube                 1/1     Running   0          23h
kube-system           kube-controller-manager-minikube        1/1     Running   0          23h
kube-system           kube-proxy-6nhhs                        1/1     Running   0          23h
kube-system           kube-scheduler-minikube                 1/1     Running   0          23h
kube-system           kubernetes-dashboard-5bff5f8fb8-p2m57   1/1     Running   0          23h
kube-system           storage-provisioner                     1/1     Running   0          23h
kube-system           tiller-deploy-79c4c54bc4-vprfl          1/1     Running   0          23h
nl-amis-development   booksservice-v1.0-68785bc6ff-cq769      1/1     Running   0          23h
nl-amis-development   booksservice-v1.0-68785bc6ff-x7mf8      1/1     Running   0          23h
nl-amis-development   booksservice-v2.0-869c5bb47d-bwdc5      1/1     Running   0          23h
nl-amis-development   booksservice-v2.0-869c5bb47d-nwfgf      1/1     Running   0          23h
nl-amis-logging       elasticsearch-6b46c44f7c-v569h          1/1     Running   0          23h
nl-amis-logging       filebeat-daemonset-q5pb2                1/1     Running   0          23h
nl-amis-logging       kibana-6f96d679c4-kb5qw                 1/1     Running   0          23h
nl-amis-testing       booksservice-v1.0-5bcd5fddbd-cklsw      1/1     Running   0          23h
nl-amis-testing       booksservice-v1.0-5bcd5fddbd-n9qkx      1/1     Running   0          23h
nl-amis-testing       mysql-64846c7974-w7mz9                  1/1     Running   0          23h
```

- Command to get the YAML (with object information) for a particular Pod:

kubectl get pod -n nl-amis-development booksservice-v1.0-68785bc6ff-cq769 -o yaml

With the following output:

```yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: "2019-09-04T19:49:37Z"
  generateName: booksservice-v1.0-68785bc6ff-
  labels:
    app: booksservice
    environment: development
    pod-template-hash: 68785bc6ff
    version: "1.0"
  name: booksservice-v1.0-68785bc6ff-cq769
  namespace: nl-amis-development
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: ReplicaSet
    name: booksservice-v1.0-68785bc6ff
    uid: 207a1cd7-cf4d-11e9-95ef-023e591c269a
  resourceVersion: "1711"
  selfLink: /api/v1/namespaces/nl-amis-development/pods/booksservice-v1.0-68785bc6ff-cq769
  uid: 208017d3-cf4d-11e9-95ef-023e591c269a
spec:
  containers:
  - env:
    - name: spring.profiles.active
      value: development
    image: booksservice:v1.0
    imagePullPolicy: IfNotPresent
    name: booksservice-v1-0-container
    ports:
    - containerPort: 9090
      protocol: TCP
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-4kz7x
      readOnly: true
  dnsPolicy: ClusterFirst
  nodeName: minikube
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: default-token-4kz7x
    secret:
      defaultMode: 420
      secretName: default-token-4kz7x
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2019-09-04T19:49:37Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2019-09-04T19:49:42Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2019-09-04T19:49:42Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2019-09-04T19:49:37Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: docker://b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9
    image: booksservice:v1.0
    imageID: docker://sha256:296bfc231d3bbbe6954ad9a18c3fdfe2d6dcb81a84ee450d433449a63dda4928
    lastState: {}
    name: booksservice-v1-0-container
    ready: true
    restartCount: 0
    state:
      running:
        startedAt: "2019-09-04T19:49:41Z"
  hostIP: 10.0.2.15
  phase: Running
  podIP: 172.17.0.12
  qosClass: BestEffort
  startTime: "2019-09-04T19:49:37Z"
```

- Command which uses JSONPath expressions to filter on specific fields in the JSON object and format the output:

kubectl get pods --all-namespaces -o=jsonpath='{range .items[*]}[{.metadata.name}{"\t"},{.metadata.namespace}{"\t"},{.metadata.labels.app}{"\t"},{.metadata.labels.environment}{"\t"},{.metadata.labels.version}{"\t"},{range .status.containerStatuses[*]}{.containerID}{"\t"},{.name}]{"\n"}{end}{end}' | sort

[https://kubernetes.io/docs/reference/kubectl/jsonpath/]

With the following output:

```text
[booksservice-v1.0-5bcd5fddbd-cklsw ,nl-amis-testing ,booksservice ,testing ,1.0 ,docker://171b2526ae9d147dab7fb2180764d55c03c7ad706eca605ad5c849aafef5d38d ,booksservice-v1-0-container]
[booksservice-v1.0-5bcd5fddbd-n9qkx ,nl-amis-testing ,booksservice ,testing ,1.0 ,docker://f7a9b8a4021073f8c7daba0000482f9f9356495beec7c8d49b9b45f0055f9c20 ,booksservice-v1-0-container]
[booksservice-v1.0-68785bc6ff-cq769 ,nl-amis-development ,booksservice ,development ,1.0 ,docker://b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9 ,booksservice-v1-0-container]
[booksservice-v1.0-68785bc6ff-x7mf8 ,nl-amis-development ,booksservice ,development ,1.0 ,docker://bff91eb16a15d0a2058919d7ce7b5077ea9d3f0542c7930f48b29ca1099a54ae ,booksservice-v1-0-container]
[booksservice-v2.0-869c5bb47d-bwdc5 ,nl-amis-development ,booksservice ,development ,2.0 ,docker://4344b9a63ac54218dac88148203b2394ac973fe5d1f201a1a870f213e417122c ,booksservice-v2-0-container]
[booksservice-v2.0-869c5bb47d-nwfgf ,nl-amis-development ,booksservice ,development ,2.0 ,docker://6b0e9a44932986cc6ae2353f28d4c9aff32e249abd0ac38ee22a27614aecb30f ,booksservice-v2-0-container]
[coredns-576cbf47c7-jvbzp ,kube-system , , , ,docker://8214e5aafc1d034d03abadaf606f9b1a1303757ea4ba5d4fdb689456711e5fad ,coredns]
[coredns-576cbf47c7-mpl8t ,kube-system , , , ,docker://c2e03eb2b0a063120980b99e9b82067d2c72c82c2d86ad7092cd1cc6edbb54a7 ,coredns]
[elasticsearch-6b46c44f7c-v569h ,nl-amis-logging ,elasticsearch ,logging ,7.0.0 ,docker://b8bdbfe91a6aff0f11ef6d62eb20fe186a7fc8eec501b81d31ad327f45009e20 ,elasticsearch-container]
[etcd-minikube ,kube-system , , , ,docker://74c09a3c714c19fd6cebf1990836cc8e8427e89cea079f612679999874faaa60 ,etcd]
[filebeat-daemonset-q5pb2 ,nl-amis-logging ,filebeat ,logging ,1.0 ,docker://2c65d0c5b03a113fb1d1c38b25feb9379ee25840dbc7210f572c52e8b6df610c ,filebeat]
[kibana-6f96d679c4-kb5qw ,nl-amis-logging ,kibana ,logging ,7.0.0 ,docker://7aa9e0be446db37ad5d0ff76d5c7a8733559f3ce5b9766871c75a56719119c68 ,kibana-container]
[kube-addon-manager-minikube ,kube-system , , ,v8.6 ,docker://d7918eb9fdaa79b9a202f0526caaaa36e8fa531a7fc0a83a1ee513343f6743ea ,kube-addon-manager]
[kube-apiserver-minikube ,kube-system , , , ,docker://d57c1cea8886da34b98df15806675d08196056460c8887655a270ca98261a543 ,kube-apiserver]
[kube-controller-manager-minikube ,kube-system , , , ,docker://3312a17ec93a141143a38779e156b96b99d1b1e9f52c27011a9cff5e510410c4 ,kube-controller-manager]
[kube-proxy-6nhhs ,kube-system , , , ,docker://36d6f50d14a2854208a849da8cff1ea2fe9217b0b19d46f0aead7aff012e24a1 ,kube-proxy]
[kubernetes-dashboard-5bff5f8fb8-p2m57 ,kube-system ,kubernetes-dashboard , ,v1.10.1 ,docker://09dbb37fd83b5ed1a1e23c43403fac8d258815c67a6b791e5c5714ba69ca3b02 ,kubernetes-dashboard]
[kube-scheduler-minikube ,kube-system , , , ,docker://956c43ecd2bb3154720055d7f1abf53b2d9287a265f5236179bb232bb1a0f55d ,kube-scheduler]
[mysql-64846c7974-w7mz9 ,nl-amis-testing ,mysql ,testing ,1.0 ,docker://3d6ce5328e7ebcbdf012791fc87d45374afe137439ff13c28fa75ff5fc408f1d ,mysql]
[storage-provisioner ,kube-system , , , ,docker://9d01d6f19dc28d7104282ccec672b211842cff9f813ef586790d8882e0ed20c4 ,storage-provisioner]
[tiller-deploy-79c4c54bc4-vprfl ,kube-system ,helm , , ,docker://5c36d2f20b5be0ddffae6844837b3eaf7b1ad5356a5203ea262c547c2cf80394 ,tiller]
```
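The table of booksservice Pods shown earlier can be derived from this output. One possible way is the bash/awk sketch below. It keeps the lines whose app label matches and prints the Pod name, namespace and bare container ID. The helper name `pods_with_app` is hypothetical. It assumes one container per Pod; with more containers, only the first ID is printed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: filter lines in the bracketed, comma-separated format
# printed by the JSONPath command and print "<pod> <namespace> <container ID>"
# for each Pod whose app label equals $1. Assumes one container per Pod.
pods_with_app() {
  awk -F',' -v app="$1" '
    {
      gsub(/^\[|\]$/, "")                 # drop the surrounding brackets
      for (i = 1; i <= NF; i++)
        gsub(/^[ \t]+|[ \t]+$/, "", $i)   # trim tab/space padding per field
    }
    $3 == app {
      sub(/^docker:\/\//, "", $6)         # keep only the bare container ID
      print $1, $2, $6
    }
  '
}

pods_with_app booksservice <<'EOF'
[booksservice-v1.0-68785bc6ff-cq769 ,nl-amis-development ,booksservice ,development ,1.0 ,docker://b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9 ,booksservice-v1-0-container]
[etcd-minikube ,kube-system , , , ,docker://74c09a3c714c19fd6cebf1990836cc8e8427e89cea079f612679999874faaa60 ,etcd]
EOF
```

In practice, you would pipe the JSONPath command above into `pods_with_app booksservice`.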

Investigating the content of the log file for a particular Pod

There are several ways in which you can have a look at the content of the log file for a particular Pod (let’s say for the first Pod in the table shown earlier). For example:

- Via kubectl

kubectl --namespace=nl-amis-development logs booksservice-v1.0-68785bc6ff-cq769 --tail=20

- Via the Kubernetes Web UI (Dashboard)

Navigate to Workloads | Pods:

Select Pod booksservice-v1.0-68785bc6ff-cq769 and click on Logs (hamburger menu)

- Via the directory /var/lib/docker/containers

Every Docker container has a folder “/var/lib/docker/containers/<containerID>” on the host machine, which contains the log file “<containerID>-json.log”.

So, in my case, the log file can be found in the directory:

/var/lib/docker/containers/b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9

I listed the files in the directory:

sudo ls -latr /var/lib/docker/containers/b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9

With the following output:

```text
total 40
drwx------ 2 root root 4096 Sep 4 19:49 checkpoints
drwx------ 2 root root 4096 Sep 4 19:49 mounts
drwx------ 45 root root 4096 Sep 4 19:49 ..
-rw-r--r-- 1 root root 2015 Sep 4 19:49 hostconfig.json
-rw------- 1 root root 6009 Sep 4 19:49 config.v2.json
drwx------ 4 root root 4096 Sep 4 19:49 .
-rw-r----- 1 root root 10078 Sep 4 19:51 b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9-json.log
```

I showed the last 20 lines of the content of the log file:

sudo tail -n 20 /var/lib/docker/containers/b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9/b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9-json.log
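Each line in a Docker json-file log is a small JSON object with the fields log, stream and time. The log field holds the original output line, including its trailing newline. For a quick, readable look, a simplified bash/sed sketch can turn such lines into `<time> [<stream>] <message>`. The helper `parse_docker_json_line` and the sample line are hypothetical. The regular expression assumes that field order and breaks on partial lines or messages containing the text `","stream":"`, so a real JSON parser such as jq is the safer choice.

```bash
#!/usr/bin/env bash
# Simplified sketch: turn one Docker json-file log line into
# "<time> [<stream>] <message>". Assumes the field order log, stream, time and a
# message ending in an escaped newline (\n); lines that do not match pass unchanged.
parse_docker_json_line() {
  sed -E 's/^\{"log":"(.*)\\n","stream":"([a-z]+)","time":"([^"]+)"\}$/\3 [\2] \1/'
}

# Hypothetical sample line in the json-file format
printf '%s\n' '{"log":"Started BooksServiceApplication\n","stream":"stdout","time":"2019-09-04T19:49:50.000000000Z"}' \
  | parse_docker_json_line
```

For example: `sudo tail -n 20 <log file> | parse_docker_json_line`.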

- Via the directory /var/log/containers

I listed the files in the directory:

sudo ls -latr /var/log/containers

With the following output:

```text
total 88
drwxrwxr-x 9 root syslog 4096 Sep 4 19:34 ..
lrwxrwxrwx 1 root root 57 Sep 4 19:34 etcd-minikube_kube-system_etcd-74c09a3c714c19fd6cebf1990836cc8e8427e89cea079f612679999874faaa60.log -> /var/log/pods/400930335566057521570dcbaf3dbb0b/etcd/0.log
lrwxrwxrwx 1 root root 67 Sep 4 19:34 kube-scheduler-minikube_kube-system_kube-scheduler-956c43ecd2bb3154720055d7f1abf53b2d9287a265f5236179bb232bb1a0f55d.log -> /var/log/pods/e1b3e16379a55d4c355fa42bc75eb023/kube-scheduler/0.log
lrwxrwxrwx 1 root root 67 Sep 4 19:34 kube-apiserver-minikube_kube-system_kube-apiserver-d57c1cea8886da34b98df15806675d08196056460c8887655a270ca98261a543.log -> /var/log/pods/56a6d3191989178a49a5c197f01f4179/kube-apiserver/0.log
lrwxrwxrwx 1 root root 76 Sep 4 19:34 kube-controller-manager-minikube_kube-system_kube-controller-manager-3312a17ec93a141143a38779e156b96b99d1b1e9f52c27011a9cff5e510410c4.log -> /var/log/pods/8ed2b4b6a766901d30591a648262d9f9/kube-controller-manager/0.log
lrwxrwxrwx 1 root root 71 Sep 4 19:34 kube-addon-manager-minikube_kube-system_kube-addon-manager-d7918eb9fdaa79b9a202f0526caaaa36e8fa531a7fc0a83a1ee513343f6743ea.log -> /var/log/pods/d682efea6fd7d1c11b13f78e8c81af08/kube-addon-manager/0.log
lrwxrwxrwx 1 root root 64 Sep 4 19:35 coredns-576cbf47c7-jvbzp_kube-system_coredns-8214e5aafc1d034d03abadaf606f9b1a1303757ea4ba5d4fdb689456711e5fad.log -> /var/log/pods/1c73e88d-cf4b-11e9-95ef-023e591c269a/coredns/0.log
lrwxrwxrwx 1 root root 64 Sep 4 19:35 coredns-576cbf47c7-mpl8t_kube-system_coredns-c2e03eb2b0a063120980b99e9b82067d2c72c82c2d86ad7092cd1cc6edbb54a7.log -> /var/log/pods/1cbca0a0-cf4b-11e9-95ef-023e591c269a/coredns/0.log
lrwxrwxrwx 1 root root 67 Sep 4 19:35 kube-proxy-6nhhs_kube-system_kube-proxy-36d6f50d14a2854208a849da8cff1ea2fe9217b0b19d46f0aead7aff012e24a1.log -> /var/log/pods/1c68b964-cf4b-11e9-95ef-023e591c269a/kube-proxy/0.log
lrwxrwxrwx 1 root root 77 Sep 4 19:36 kubernetes-dashboard-5bff5f8fb8-p2m57_kube-system_kubernetes-dashboard-09dbb37fd83b5ed1a1e23c43403fac8d258815c67a6b791e5c5714ba69ca3b02.log -> /var/log/pods/1eb91a11-cf4b-11e9-95ef-023e591c269a/kubernetes-dashboard/0.log
lrwxrwxrwx 1 root root 76 Sep 4 19:36 storage-provisioner_kube-system_storage-provisioner-9d01d6f19dc28d7104282ccec672b211842cff9f813ef586790d8882e0ed20c4.log -> /var/log/pods/1f7a6b51-cf4b-11e9-95ef-023e591c269a/storage-provisioner/0.log
lrwxrwxrwx 1 root root 63 Sep 4 19:36 tiller-deploy-79c4c54bc4-vprfl_kube-system_tiller-5c36d2f20b5be0ddffae6844837b3eaf7b1ad5356a5203ea262c547c2cf80394.log -> /var/log/pods/3a175e09-cf4b-11e9-95ef-023e591c269a/tiller/0.log
lrwxrwxrwx 1 root root 80 Sep 4 19:40 elasticsearch-6b46c44f7c-v569h_nl-amis-logging_elasticsearch-container-b8bdbfe91a6aff0f11ef6d62eb20fe186a7fc8eec501b81d31ad327f45009e20.log -> /var/log/pods/a6d793d3-cf4b-11e9-95ef-023e591c269a/elasticsearch-container/0.log
lrwxrwxrwx 1 root root 73 Sep 4 19:43 kibana-6f96d679c4-kb5qw_nl-amis-logging_kibana-container-7aa9e0be446db37ad5d0ff76d5c7a8733559f3ce5b9766871c75a56719119c68.log -> /var/log/pods/0148ec4e-cf4c-11e9-95ef-023e591c269a/kibana-container/0.log
lrwxrwxrwx 1 root root 65 Sep 4 19:44 filebeat-daemonset-q5pb2_nl-amis-logging_filebeat-2c65d0c5b03a113fb1d1c38b25feb9379ee25840dbc7210f572c52e8b6df610c.log -> /var/log/pods/5baef596-cf4c-11e9-95ef-023e591c269a/filebeat/0.log
lrwxrwxrwx 1 root root 62 Sep 4 19:45 mysql-64846c7974-w7mz9_nl-amis-testing_mysql-3d6ce5328e7ebcbdf012791fc87d45374afe137439ff13c28fa75ff5fc408f1d.log -> /var/log/pods/85319a39-cf4c-11e9-95ef-023e591c269a/mysql/0.log
lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v1.0-5bcd5fddbd-cklsw_nl-amis-testing_booksservice-v1-0-container-171b2526ae9d147dab7fb2180764d55c03c7ad706eca605ad5c849aafef5d38d.log -> /var/log/pods/20936e0c-cf4d-11e9-95ef-023e591c269a/booksservice-v1-0-container/0.log
lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v1.0-5bcd5fddbd-n9qkx_nl-amis-testing_booksservice-v1-0-container-f7a9b8a4021073f8c7daba0000482f9f9356495beec7c8d49b9b45f0055f9c20.log -> /var/log/pods/209cc8b9-cf4d-11e9-95ef-023e591c269a/booksservice-v1-0-container/0.log
lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v1.0-68785bc6ff-x7mf8_nl-amis-development_booksservice-v1-0-container-bff91eb16a15d0a2058919d7ce7b5077ea9d3f0542c7930f48b29ca1099a54ae.log -> /var/log/pods/20897a07-cf4d-11e9-95ef-023e591c269a/booksservice-v1-0-container/0.log
lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v2.0-869c5bb47d-bwdc5_nl-amis-development_booksservice-v2-0-container-4344b9a63ac54218dac88148203b2394ac973fe5d1f201a1a870f213e417122c.log -> /var/log/pods/20800711-cf4d-11e9-95ef-023e591c269a/booksservice-v2-0-container/0.log
lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v2.0-869c5bb47d-nwfgf_nl-amis-development_booksservice-v2-0-container-6b0e9a44932986cc6ae2353f28d4c9aff32e249abd0ac38ee22a27614aecb30f.log -> /var/log/pods/2088e3d9-cf4d-11e9-95ef-023e591c269a/booksservice-v2-0-container/0.log
lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v1.0-68785bc6ff-cq769_nl-amis-development_booksservice-v1-0-container-b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9.log -> /var/log/pods/208017d3-cf4d-11e9-95ef-023e591c269a/booksservice-v1-0-container/0.log
drwxr-xr-x 2 root root 4096 Sep 4 19:49 .
```

I showed the last 20 lines of the content of the log file:

sudo tail -n 20 /var/log/containers/booksservice-v1.0-68785bc6ff-cq769_nl-amis-development_booksservice-v1-0-container-b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9.log
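The names in /var/log/containers follow the pattern `<pod>_<namespace>_<container>-<containerID>.log`. This pattern is what makes the `logs_path` matcher of the add_kubernetes_metadata processor useful: Filebeat can take the container ID from the file path and look up the matching Pod metadata. The bash sketch below splits such a name into its parts. The helper `parse_container_log_name` is hypothetical. It relies on Pod and namespace names never containing underscores, which Kubernetes does not allow.

```bash
#!/usr/bin/env bash
# Hypothetical helper: split a /var/log/containers file name into its parts,
# following the <pod>_<namespace>_<container>-<containerID>.log pattern.
# Pod and namespace names cannot contain underscores; the ID is 64 hex characters.
parse_container_log_name() {
  local name re='^([^_]+)_([^_]+)_(.+)-([0-9a-f]{64})$'
  name=$(basename "$1" .log)
  [[ $name =~ $re ]] || return 1
  printf 'pod=%s namespace=%s container=%s id=%s\n' "${BASH_REMATCH[@]:1:4}"
}

parse_container_log_name /var/log/containers/booksservice-v1.0-68785bc6ff-cq769_nl-amis-development_booksservice-v1-0-container-b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9.log
```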

- Via the directory /var/log/pods

I listed the files in the directory:

sudo ls -latr /var/log/pods

With the following output:

total 92

drwxrwxr-x 9 root syslog 4096 Sep 4 19:34 ..

drwxr-xr-x 3 root root 4096 Sep 4 19:34 400930335566057521570dcbaf3dbb0b

drwxr-xr-x 3 root root 4096 Sep 4 19:34 56a6d3191989178a49a5c197f01f4179

drwxr-xr-x 3 root root 4096 Sep 4 19:34 e1b3e16379a55d4c355fa42bc75eb023

drwxr-xr-x 3 root root 4096 Sep 4 19:34 8ed2b4b6a766901d30591a648262d9f9

drwxr-xr-x 3 root root 4096 Sep 4 19:34 d682efea6fd7d1c11b13f78e8c81af08

drwxr-xr-x 3 root root 4096 Sep 4 19:35 1c68b964-cf4b-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:35 1c73e88d-cf4b-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:35 1cbca0a0-cf4b-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:36 1eb91a11-cf4b-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:36 1f7a6b51-cf4b-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:36 3a175e09-cf4b-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:40 a6d793d3-cf4b-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:43 0148ec4e-cf4c-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:44 5baef596-cf4c-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:45 85319a39-cf4c-11e9-95ef-023e591c269a

drwxr-xr-x 23 root root 4096 Sep 4 19:49 .

drwxr-xr-x 3 root root 4096 Sep 4 19:49 20936e0c-cf4d-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:49 208017d3-cf4d-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:49 209cc8b9-cf4d-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:49 20897a07-cf4d-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:49 2088e3d9-cf4d-11e9-95ef-023e591c269a

drwxr-xr-x 3 root root 4096 Sep 4 19:49 20800711-cf4d-11e9-95ef-023e591c269a

I showed the last 20 lines of the content of the log file:

sudo tail -n 20 /var/log/pods/208017d3-cf4d-11e9-95ef-023e591c269a/booksservice-v1-0-container/0.log

Creating the ConfigMap from a file

In line with what I did when I used Fluentd (see previous article), I wanted to create the ConfigMap from a file. So, I changed the default configuration file location (/etc/filebeat.yml) to /etc/custom-config/filebeat.yml.

[https://technology.amis.nl/2019/04/23/using-vagrant-and-shell-scripts-to-further-automate-setting-up-my-demo-environment-from-scratch-including-elasticsearch-fluentd-and-kibana-efk-within-minikube/]

Therefore, in the vagrant directory I created a subdirectory structure configmaps/configmap-filebeat with a file filebeat.yml with the following content:

filebeat.inputs:
- type: container
  paths:
    - /var/log/containers/*.log
  processors:
    - add_kubernetes_metadata:
        in_cluster: true
        host: ${NODE_NAME}
        matchers:
        - logs_path:
            logs_path: "/var/log/containers/"

# To enable hints based autodiscover, remove `filebeat.inputs` configuration and uncomment this:
#filebeat.autodiscover:
#  providers:
#    - type: kubernetes
#      host: ${NODE_NAME}
#      hints.enabled: true
#      hints.default_config:
#        type: container
#        paths:
#          - /var/log/containers/*${data.kubernetes.container.id}.log

processors:
  - add_cloud_metadata:
  - add_host_metadata:

cloud.id: ${ELASTIC_CLOUD_ID}
cloud.auth: ${ELASTIC_CLOUD_AUTH}

output.elasticsearch:
  hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
  username: ${ELASTICSEARCH_USERNAME}
  password: ${ELASTICSEARCH_PASSWORD}

Remark:

For more information about the content of this config file, I kindly refer you to the Filebeat documentation, for example:

https://www.elastic.co/guide/en/beats/filebeat/master/add-kubernetes-metadata.html
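The symlink names in /var/log/containers, shown in the listing earlier, follow the pattern <pod name>_<namespace>_<container name>-<container id>.log, and the logs_path matcher of add_kubernetes_metadata uses this path to work out which container (and therefore which pod) a log line belongs to. The bash function below is only a sketch of that naming convention, not Filebeat's implementation; the function name parse_container_log_name is hypothetical, and it assumes pod and namespace names never contain an underscore (Kubernetes does not allow one in them).

```bash
#!/usr/bin/env bash
# Sketch: split a /var/log/containers symlink name into its parts.
# Assumed layout: <pod>_<namespace>_<container>-<container id>.log
parse_container_log_name() {
  local name rest
  name="$(basename "$1" .log)"      # strip the directory and the .log suffix
  local pod="${name%%_*}"           # everything before the first "_"
  rest="${name#*_}"
  local namespace="${rest%%_*}"     # between the first and the second "_"
  rest="${rest#*_}"
  local container_id="${rest##*-}"  # everything after the last "-"
  local container="${rest%-*}"      # everything before the last "-"
  printf 'pod=%s\nnamespace=%s\ncontainer=%s\ncontainer_id=%s\n' \
    "$pod" "$namespace" "$container" "$container_id"
}
```

For example, calling parse_container_log_name on the mysql symlink prints pod=mysql-64846c7974-w7mz9, namespace=nl-amis-testing, container=mysql and the 64-character container ID.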

I created a ConfigMap that holds the filebeat config file using:

kubectl create configmap filebeat-configmap --from-file=/vagrant/configmaps/configmap-filebeat --namespace nl-amis-logging

Next, I added labels to the ConfigMap using:

kubectl label configmap filebeat-configmap --namespace nl-amis-logging app=filebeat
kubectl label configmap filebeat-configmap --namespace nl-amis-logging version="1.0"
kubectl label configmap filebeat-configmap --namespace nl-amis-logging environment=logging

A ConfigMap can be created via a yaml file, but not if you want to use the --from-file option, because Kubernetes isn’t aware of the local file’s path.

[https://stackoverflow.com/questions/51268488/kubernetes-configmap-set-from-file-in-yaml-configuration]

You must create a ConfigMap before referencing it in a Pod specification (unless you mark the ConfigMap as “optional”). If you reference a ConfigMap that doesn’t exist, the Pod won’t start.

ConfigMaps reside in a specific namespace. A ConfigMap can only be referenced by pods residing in the same namespace.

[https://kubernetes.io/docs/tasks/configure-pod-container/configure-pod-configmap/]

When you create a ConfigMap using --from-file, the filename becomes a key stored in the data section of the ConfigMap. The file contents become the key’s value.

[https://kubernetes.io/docs/tasks/configure-pod-container/configure-pod-configmap/#add-configmap-data-to-a-volume]
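The filebeat.sh script passes a directory (--from-file=/vagrant/configmaps/configmap-filebeat) rather than a single file. According to the Kubernetes documentation, each regular file directly in such a directory becomes a key, while subdirectories, symlinks and other entries are ignored. The hypothetical bash function below lists the keys that would result; it skips hidden files and does not validate key names, so treat it as an approximation of what kubectl does.

```bash
#!/usr/bin/env bash
# Sketch: print the keys that `kubectl create configmap --from-file=<directory>`
# would be expected to create: one key per regular file, named after the file.
configmap_keys_for_dir() {
  local dir="$1" entry
  for entry in "$dir"/*; do
    # -f also succeeds for symlinks to regular files, so exclude those with -L
    if [[ -f "$entry" && ! -L "$entry" ]]; then
      basename "$entry"
    fi
  done
}
```

Run against /vagrant/configmaps/configmap-filebeat, this should print only filebeat.yml, which is the key that the DaemonSet later mounts as /etc/custom-config/filebeat.yml.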

On my Windows laptop, in the yaml directory, I deleted the file configmap-filebeat.yaml.

In the scripts directory I changed the file filebeat.sh to have the following content:

#!/bin/bash

echo "**** Begin installing Filebeat"

#Create ConfigMap before creating DaemonSet

kubectl create configmap filebeat-configmap --from-file=/vagrant/configmaps/configmap-filebeat --namespace nl-amis-logging

#Label ConfigMap

kubectl label configmap filebeat-configmap --namespace nl-amis-logging app=filebeat

kubectl label configmap filebeat-configmap --namespace nl-amis-logging version="1.0"

kubectl label configmap filebeat-configmap --namespace nl-amis-logging environment=logging

#List configmaps

echo "**** List configmap filebeat-configmap with namespace nl-amis-logging"

#kubectl describe configmaps filebeat-configmap --namespace nl-amis-logging

kubectl get configmaps filebeat-configmap --namespace nl-amis-logging -o yaml

#Create Helm chart

echo "**** Create Helm chart"

cd /vagrant

cd helmcharts

rm -rf /vagrant/helmcharts/filebeat-chart/*

helm create filebeat-chart

rm -rf /vagrant/helmcharts/filebeat-chart/templates/*

cp /vagrant/yaml/*filebeat.yaml /vagrant/helmcharts/filebeat-chart/templates

#Exiting: error loading config file: config file ("/etc/filebeat.yaml") can only be writable by the owner but the permissions are "-rwxrwxrwx" (to fix the permissions use: 'chmod go-w /etc/filebeat.yaml')

# Install Helm chart

cd /vagrant

cd helmcharts

echo "**** Install Helm chart filebeat-chart"

helm install ./filebeat-chart --name filebeat-release

# Wait 1 minute

echo "**** Waiting 1 minute ..."

sleep 60

echo "**** Check if a certain action (list) on a resource (pods) is allowed for a specific user (system:serviceaccount:nl-amis-logging:filebeat-serviceaccount) ****"

kubectl auth can-i list pods --as="system:serviceaccount:nl-amis-logging:filebeat-serviceaccount" --namespace nl-amis-logging

#List helm releases

echo "**** List helm releases"

helm list -d

#List pods

echo "**** List pods with namespace nl-amis-logging"

kubectl get pods --namespace nl-amis-logging

#echo "**** Determine the pod name of the filebeat-* pod in namespace nl-amis-logging"

#podName=$(kubectl get pods --namespace nl-amis-logging | grep filebeat- | grep -E -o "^\S*")

#echo "---$podName---"

#echo "**** Check the log file of the $podName pod in namespace nl-amis-logging"

#log=$(kubectl logs $podName --namespace nl-amis-logging | grep "Connection opened to Elasticsearch cluster")

#echo "---$log---"

echo "**** End installing Filebeat"
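The commented-out error message in the script above is produced by Filebeat's permission check on its config file: the file may only be writable by its owner (the message itself suggests chmod go-w). The bash sketch below approximates only the write-permission part of that check; the function name is hypothetical and it assumes GNU coreutils stat. Filebeat can also be started with --strict.perms=false to skip the check, although fixing the permissions is the safer choice.

```bash
#!/usr/bin/env bash
# Sketch: does a file pass a "writable by the owner only" check?
# Assumes GNU stat, where `stat -c '%a'` prints the octal permission bits.
config_perms_ok() {
  local mode
  mode="$(stat -c '%a' "$1")" || return 2
  # 022 = group-write and other-write bits; both must be cleared
  if (( (8#$mode & 8#022) == 0 )); then
    return 0
  else
    echo "error: $1 has mode $mode; fix with: chmod go-w $1" >&2
    return 1
  fi
}
```

A file with mode 644 passes, while the -rwxrwxrwx (777) file from the error message fails and gets the same chmod go-w hint.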

In the yaml directory I changed the file daemonset-filebeat.yaml to have the following content:

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: filebeat-daemonset
  namespace: nl-amis-logging
  labels:
    app: filebeat
    version: "1.0"
    environment: logging
spec:
  template:
    metadata:
      labels:
        app: filebeat
        version: "1.0"
        environment: logging
    spec:
      serviceAccountName: filebeat-serviceaccount
      terminationGracePeriodSeconds: 30
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: filebeat
        image: docker.elastic.co/beats/filebeat:7.3.1
        args: [
          "-c", "/etc/custom-config/filebeat.yml",
          "-e",
        ]
        env:
        - name: ELASTICSEARCH_HOST
          value: "elasticsearch-service.nl-amis-logging"
        - name: ELASTICSEARCH_PORT
          value: "9200"
        - name: ELASTICSEARCH_USERNAME
          value: elastic
        - name: ELASTICSEARCH_PASSWORD
          value: changeme
        - name: ELASTIC_CLOUD_ID
          value:
        - name: ELASTIC_CLOUD_AUTH
          value:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        securityContext:
          runAsUser: 0
          # If using Red Hat OpenShift uncomment this:
          #privileged: true
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: filebeat-config-volume
          mountPath: /etc/custom-config
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: varlog
          mountPath: /var/log
          readOnly: true
      volumes:
      - name: filebeat-config-volume
        configMap:
          name: filebeat-configmap
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: varlog
        hostPath:
          path: /var/log
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate

So, because I made some changes, I deleted and re-installed the release of Filebeat (via Helm, the package manager for Kubernetes).

helm del --purge filebeat-release
release "filebeat-release" deleted

Next, I started the shell script:

cd /vagrant/scripts
./filebeat.sh

==> v1/ServiceAccount

NAME SECRETS AGE

filebeat-serviceaccount 1 0s

==> v1beta1/ClusterRole

NAME AGE

filebeat-clusterrole 0s

==> v1beta1/ClusterRoleBinding

NAME AGE

filebeat-clusterrolebinding 0s

==> v1beta1/DaemonSet

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

filebeat-daemonset 1 1 0 1 0 0s

As can be seen in daemonset-filebeat.yaml, Filebeat uses the hostPath /var/lib/filebeat-data to persist internal data.

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: filebeat-daemonset
  namespace: nl-amis-logging
  labels:
    app: filebeat
    version: "1.0"
    environment: logging
spec:
  …
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate

On the host (my virtual machine), I listed the files in the directory:

vagrant@ubuntu-xenial:/vagrant/scripts$ cd /var/lib/filebeat-data
vagrant@ubuntu-xenial:/var/lib/filebeat-data$ ls -latr

With the following output:

total 16

drwxr-xr-x 47 root root 4096 Sep 4 19:44 ..

-rw------- 1 root root 48 Sep 4 19:44 meta.json

drwxr-x--- 3 root root 4096 Sep 4 19:44 registry

drwxr-xr-x 3 root root 4096 Sep 4 19:44 .

I listed the files in the working directory of the running container:

kubectl exec -it filebeat-daemonset-bzz86 --namespace nl-amis-logging -- ls -latr

Next, I listed the files in the data directory inside the container (the mountPath of the data volume):

kubectl exec -it filebeat-daemonset-bzz86 --namespace nl-amis-logging -- ls -latr /usr/share/filebeat/data

With the following output:

total 16

drwxr-xr-x 47 root root 4096 Sep 4 19:44 ..

-rw------- 1 root root 48 Sep 4 19:44 meta.json

drwxr-x--- 3 root root 4096 Sep 4 19:44 registry

drwxr-xr-x 3 root root 4096 Sep 4 19:44 .

Remark:

The double dash symbol “--” is used to separate the arguments you want to pass to the command from the kubectl arguments.

[https://kubernetes.io/docs/tasks/debug-application-cluster/get-shell-running-container/]
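The same convention can be sketched in plain bash: everything before the first -- is handled by the outer tool, and everything after it is passed on untouched, even arguments that look like options. The function split_at_double_dash is hypothetical and only prints the two groups; it illustrates the convention, not how kubectl parses its flags internally.

```bash
#!/usr/bin/env bash
# Sketch of the "--" convention: arguments before the first "--" belong to
# the outer tool, everything after it goes to the command that is run.
split_at_double_dash() {
  local -a before=() after=()
  local seen=0 arg
  for arg in "$@"; do
    if (( seen == 0 )) && [[ "$arg" == "--" ]]; then
      seen=1        # only the first "--" acts as the separator
      continue
    fi
    if (( seen )); then after+=("$arg"); else before+=("$arg"); fi
  done
  echo "tool args:    ${before[*]}"
  echo "command args: ${after[*]}"
}
```

Called with arguments such as exec -it filebeat-daemonset-bzz86 --namespace nl-amis-logging -- ls -latr, the flag --namespace stays with the tool, while ls -latr ends up in the second group.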

Elasticsearch index

In the Kibana Dashboard via Management | Kibana | Index Patterns you can create an index pattern.

Kibana uses index patterns to retrieve data from Elasticsearch indices for things like visualizations.

[http://localhost:5601/app/kibana#/management/kibana/index_pattern?_g=()]

In the field “Index pattern” I entered filebeat*. The index pattern matched 1 index. Next, I clicked on button “Next step”.

In the field “Time Filter field name” I entered @timestamp.

The Time Filter will use this field to filter your data by time.

You can choose not to have a time field, but you will not be able to narrow down your data by a time range.

[http://localhost:5601/app/kibana#/management/kibana/index_pattern?_g=()]

Next, I clicked on button “Create index pattern”.

The Kibana index pattern filebeat* was created, with 1019 fields.

This page lists every field in the filebeat* index and the field’s associated core type as recorded by Elasticsearch. To change a field type, use the Elasticsearch Mapping API.

Kibana Dashboard, Discover

In the Kibana Dashboard via Discover you can see the log files.

Let’s briefly focus on the first hit.

Clicking the icon “>” expands the document.

Kibana Dashboard, Visualize, creating a visualization

In the Kibana Dashboard via Visualize you can create a visualization.

I clicked on button “Create a visualization” and selected “Pie” as the type for the visualization.

As the source, I chose the index pattern I created earlier.

After I selected filebeat* the following became visible.

In tab “Data”, in the Split Slices part, in the field “Aggregation” I selected Terms and in “Field” I selected kubernetes.container.name and left the other default settings as they were.

Then I clicked on the icon “Apply changes”, with the following result:

In tab “Options”, I selected the checkbox “Show Labels” and left the other default settings as they were.

Then I clicked on the icon “Apply changes”, with the following result:

So, the container names from the log files from the last 15 minutes are shown.

Postman

Remember that on my Windows laptop I also wanted to be able to use Postman (for sending requests); this was made possible via port forwarding.

So, I used Postman to add books to and retrieve books from the book catalog. I did this for version 1.0 and 2.0 of the BooksService application.

From Postman I invoked a request named “GetAllBooksRequest” (with method “POST” and URL “http://localhost:9010/books”).

This concerns version 1.0 in the DEV environment.

A response with “Status 200 OK” was shown (with 4 books being retrieved):

From Postman I invoked a request named “GetAllBooksRequest” (with method “POST” and URL “http://localhost:9020/books”).

This concerns version 2.0 in the DEV environment.

A response with “Status 200 OK” was shown (with 4 books being retrieved):

From Postman I invoked a request named “GetAllBooksRequest” (with method “POST” and URL “http://localhost:9110/books”).

This concerns version 1.0 in the TST environment.

A response with “Status 200 OK” was shown (with 4 books being retrieved):

Remember, each time the getAllBooks method is called, this becomes visible in the container log file.
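If you prefer the command line over Postman, the same requests can be sketched with curl. This assumes the port forwarding from the article (9010 for version 1.0 and 9020 for version 2.0 in DEV, 9110 for version 1.0 in TST) and uses POST, the method listed for GetAllBooksRequest above. The helper names are hypothetical, and any headers or request body the service might expect would still have to be added.

```bash
#!/usr/bin/env bash
# Sketch: the Postman "GetAllBooksRequest" calls expressed with curl.
# The port selects the forwarded BooksService instance (see the article).
books_url() {
  printf 'http://localhost:%s/books\n' "$1"
}

get_all_books() {
  # --show-error still reports failures (e.g. no port forwarding) despite --silent
  curl --silent --show-error --request POST "$(books_url "$1")"
}
```

For example, for port in 9010 9020 9110; do get_all_books "$port"; echo; done sends the three requests in turn, and each call should then show up in the log file of the corresponding booksservice container.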

Kibana Dashboard, Visualize, creating a visualization

In the previously created visualization, I clicked on button “Refresh”. Then the booksservice containers became visible as well.

Then I saved this Visualization, via a click on button “Save”.

In the pop-up “Save visualization”, in the field “Title” I entered containers_visualization_1. Next, I clicked on button “Confirm Save”.

The title then becomes visible in the top left of the screen.

Remark:

All the Saved Objects can be seen in the Kibana Dashboard via Management | Kibana | Saved Objects.

Filtering and enhancing the exported data

Filebeat provides a couple of options for filtering and enhancing exported data.

You can configure each input to include or exclude specific lines or files. This allows you to specify different filtering criteria for each input.

[https://www.elastic.co/guide/en/beats/filebeat/current/filtering-and-enhancing-data.html]

Filtering the exported data

I wanted to focus on the log files from the mysql and booksservice containers, so I needed to change the filter.

Remember the output from listing the directory /var/log/containers:

total 88

drwxrwxr-x 9 root syslog 4096 Sep 4 19:34 ..

…

lrwxrwxrwx 1 root root 65 Sep 4 19:44 filebeat-daemonset-q5pb2_nl-amis-logging_filebeat-2c65d0c5b03a113fb1d1c38b25feb9379ee25840dbc7210f572c52e8b6df610c.log -> /var/log/pods/5baef596-cf4c-11e9-95ef-023e591c269a/filebeat/0.log

lrwxrwxrwx 1 root root 62 Sep 4 19:45 mysql-64846c7974-w7mz9_nl-amis-testing_mysql-3d6ce5328e7ebcbdf012791fc87d45374afe137439ff13c28fa75ff5fc408f1d.log -> /var/log/pods/85319a39-cf4c-11e9-95ef-023e591c269a/mysql/0.log

lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v1.0-5bcd5fddbd-cklsw_nl-amis-testing_booksservice-v1-0-container-171b2526ae9d147dab7fb2180764d55c03c7ad706eca605ad5c849aafef5d38d.log -> /var/log/pods/20936e0c-cf4d-11e9-95ef-023e591c269a/booksservice-v1-0-container/0.log

lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v1.0-5bcd5fddbd-n9qkx_nl-amis-testing_booksservice-v1-0-container-f7a9b8a4021073f8c7daba0000482f9f9356495beec7c8d49b9b45f0055f9c20.log -> /var/log/pods/209cc8b9-cf4d-11e9-95ef-023e591c269a/booksservice-v1-0-container/0.log

lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v1.0-68785bc6ff-x7mf8_nl-amis-development_booksservice-v1-0-container-bff91eb16a15d0a2058919d7ce7b5077ea9d3f0542c7930f48b29ca1099a54ae.log -> /var/log/pods/20897a07-cf4d-11e9-95ef-023e591c269a/booksservice-v1-0-container/0.log

lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v2.0-869c5bb47d-bwdc5_nl-amis-development_booksservice-v2-0-container-4344b9a63ac54218dac88148203b2394ac973fe5d1f201a1a870f213e417122c.log -> /var/log/pods/20800711-cf4d-11e9-95ef-023e591c269a/booksservice-v2-0-container/0.log

lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v2.0-869c5bb47d-nwfgf_nl-amis-development_booksservice-v2-0-container-6b0e9a44932986cc6ae2353f28d4c9aff32e249abd0ac38ee22a27614aecb30f.log -> /var/log/pods/2088e3d9-cf4d-11e9-95ef-023e591c269a/booksservice-v2-0-container/0.log

lrwxrwxrwx 1 root root 84 Sep 4 19:49 booksservice-v1.0-68785bc6ff-cq769_nl-amis-development_booksservice-v1-0-container-b234de2b689187f94792d45ac59fda0f2f6f6969c679d6a6ca5d8323ab8fd1c9.log -> /var/log/pods/208017d3-cf4d-11e9-95ef-023e591c269a/booksservice-v1-0-container/0.log

drwxr-xr-x 2 root root 4096 Sep 4 19:49 .

Based on this output, in the subdirectory configmaps/configmap-filebeat, I changed the file filebeat.yml to the following content:

filebeat.inputs:
- type: container
  paths:
    - /var/log/containers/mysql*.log
    - /var/log/containers/booksservice*.log
  processors:
    - add_kubernetes_metadata:
        in_cluster: true
        host: ${NODE_NAME}
        matchers:
        - logs_path:
            logs_path: "/var/log/containers/"

# To enable hints based autodiscover, remove `filebeat.inputs` configuration and uncomment this:
#filebeat.autodiscover:
#  providers:
#    - type: kubernetes
#      host: ${NODE_NAME}
#      hints.enabled: true
#      hints.default_config:
#        type: container
#        paths:
#          - /var/log/containers/*${data.kubernetes.container.id}.log

processors:
  - add_cloud_metadata:
  - add_host_metadata:

cloud.id: ${ELASTIC_CLOUD_ID}
cloud.auth: ${ELASTIC_CLOUD_AUTH}

output.elasticsearch:
  hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
  username: ${ELASTICSEARCH_USERNAME}
  password: ${ELASTICSEARCH_PASSWORD}
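To double-check which files the two paths patterns select, here is a small bash sketch using a case statement. Filebeat evaluates the patterns itself with Go glob rules; for these flat file names bash patterns behave the same, the one difference being that * in a case pattern also matches /, which does not matter here. The function name is_harvested is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: would a file be picked up by the two paths patterns in filebeat.yml?
is_harvested() {
  case "$1" in
    /var/log/containers/mysql*.log|/var/log/containers/booksservice*.log)
      return 0 ;;
    *)
      return 1 ;;
  esac
}
```

On the node, for f in /var/log/containers/*.log; do is_harvested "$f" && echo "$f"; done would be expected to print only the mysql and booksservice symlinks from the listing above, and not, for example, the filebeat-daemonset one.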

So, because I made some changes, I deleted and re-installed the release of Filebeat (via Helm, the package manager for Kubernetes) and the ConfigMap.

helm del --purge filebeat-release
release "filebeat-release" deleted

kubectl --namespace=nl-amis-logging delete configmap filebeat-configmap
configmap "filebeat-configmap" deleted

Next, I started the shell script:

cd /vagrant/scripts
./filebeat.sh

With the following output:

**** Begin installing Filebeat

configmap/filebeat-configmap created

configmap/filebeat-configmap labeled

configmap/filebeat-configmap labeled

configmap/filebeat-configmap labeled

**** List configmap filebeat-configmap with namespace nl-amis-logging

apiVersion: v1
data:
  filebeat.yml: "filebeat.inputs:\r\n- type: container\r\n  paths:\r\n    - /var/log/containers/mysql*.log\r\n
    \   - /var/log/containers/booksservice*.log\r\n  processors:\r\n    - add_kubernetes_metadata:\r\n
    \       in_cluster: true\r\n        host: ${NODE_NAME}\r\n        matchers:\r\n
    \       - logs_path:\r\n            logs_path: \"/var/log/containers/\"\r\n\r\n#
    To enable hints based autodiscover, remove `filebeat.inputs` configuration and
    uncomment this:\r\n#filebeat.autodiscover:\r\n#  providers:\r\n#    - type: kubernetes\r\n#
    \     host: ${NODE_NAME}\r\n#      hints.enabled: true\r\n#      hints.default_config:\r\n#
    \       type: container\r\n#        paths:\r\n#          - /var/log/containers/*${data.kubernetes.container.id}.log\r\n\r\nprocessors:\r\n
    \ - add_cloud_metadata:\r\n  - add_host_metadata:\r\n\r\ncloud.id: ${ELASTIC_CLOUD_ID}\r\ncloud.auth:
    ${ELASTIC_CLOUD_AUTH}\r\n\r\noutput.elasticsearch:\r\n  hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']\r\n
    \ username: ${ELASTICSEARCH_USERNAME}\r\n  password: ${ELASTICSEARCH_PASSWORD}"
kind: ConfigMap
metadata:
  creationTimestamp: "2019-09-12T12:28:26Z"
  labels:
    app: filebeat
    environment: logging
    version: "1.0"
  name: filebeat-configmap
  namespace: nl-amis-logging
  resourceVersion: "53891"
  selfLink: /api/v1/namespaces/nl-amis-logging/configmaps/filebeat-configmap
  uid: d1f26a17-d558-11e9-95ef-023e591c269a

**** Create Helm chart

Creating filebeat-chart

**** Install Helm chart filebeat-chart

NAME: filebeat-release

LAST DEPLOYED: Thu Sep 12 12:28:27 2019

NAMESPACE: default

STATUS: DEPLOYED

==> v1/ServiceAccount

NAME SECRETS AGE

filebeat-serviceaccount 1 0s

==> v1beta1/ClusterRole

NAME AGE

filebeat-clusterrole 0s

==> v1beta1/ClusterRoleBinding

NAME AGE

filebeat-clusterrolebinding 0s

==> v1beta1/DaemonSet

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

filebeat-daemonset 1 1 0 1 0 0s

Kibana Dashboard, Discover

After a while (longer than 15 minutes), when I looked in the Kibana Dashboard via Discover, no results were found.

So, the filter seemed to work. To check this out, I used Postman to add books and retrieve books from the book catalog. I did this for version 1.0 and 2.0 of the BooksService application.

Again, I looked in the Kibana Dashboard via Discover and clicked on button “Refresh”; this time results were found.

In the previously created visualization, I clicked on button “Refresh”. Then the booksservice containers became visible.

The log files from the other containers are filtered out by Filebeat as I expected. But what happened to the log file from the mysql container?

I checked the content of that log file.

sudo tail -n 20 /var/log/containers/mysql-64846c7974-w7mz9_nl-amis-testing_mysql-3d6ce5328e7ebcbdf012791fc87d45374afe137439ff13c28fa75ff5fc408f1d.log

With the following output:

{"log":"2019-09-04 19:45:46 1 [Note] InnoDB: Mutexes and rw_locks use GCC atomic builtins\n","stream":"stderr","time":"2019-09-04T19:45:46.560587534Z"}
{"log":"2019-09-04 19:45:46 1 [Note] InnoDB: Memory barrier is not used\n","stream":"stderr","time":"2019-09-04T19:45:46.560618161Z"}
{"log":"2019-09-04 19:45:46 1 [Note] InnoDB: Compressed tables use zlib 1.2.11\n","stream":"stderr","time":"2019-09-04T19:45:46.560648314Z"}
{"log":"2019-09-04 19:45:46 1 [Note] InnoDB: Using Linux native AIO\n","stream":"stderr","time":"2019-09-04T19:45:46.56067815Z"}
{"log":"2019-09-04 19:45:46 1 [Note] InnoDB: Using CPU crc32 instructions\n","stream":"stderr","time":"2019-09-04T19:45:46.560819023Z"}
{"log":"2019-09-04 19:45:46 1 [Note] InnoDB: Initializing buffer pool, size = 128.0M\n","stream":"stderr","time":"2019-09-04T19:45:46.56157643Z"}
{"log":"2019-09-04 19:45:46 1 [Note] InnoDB: Completed initialization of buffer pool\n","stream":"stderr","time":"2019-09-04T19:45:46.568549963Z"}
{"log":"2019-09-04 19:45:46 1 [Note] InnoDB: Highest supported file format is Barracuda.\n","stream":"stderr","time":"2019-09-04T19:45:46.572777146Z"}
{"log":"2019-09-04 19:45:46 1 [Note] InnoDB: 128 rollback segment(s) are active.\n","stream":"stderr","time":"2019-09-04T19:45:46.581715487Z"}
{"log":"2019-09-04 19:45:46 1 [Note] InnoDB: Waiting for purge to start\n","stream":"stderr","time":"2019-09-04T19:45:46.582108737Z"}
{"log":"2019-09-04 19:45:46 1 [Note] InnoDB: 5.6.45 started; log sequence number 1625997\n","stream":"stderr","time":"2019-09-04T19:45:46.632386895Z"}
{"log":"2019-09-04 19:45:46 1 [Note] Server hostname (bind-address): '*'; port: 3306\n","stream":"stderr","time":"2019-09-04T19:45:46.632927728Z"}
{"log":"2019-09-04 19:45:46 1 [Note] IPv6 is available.\n","stream":"stderr","time":"2019-09-04T19:45:46.633827748Z"}
{"log":"2019-09-04 19:45:46 1 [Note] - '::' resolves to '::';\n","stream":"stderr","time":"2019-09-04T19:45:46.633866785Z"}
{"log":"2019-09-04 19:45:46 1 [Note] Server socket created on IP: '::'.\n","stream":"stderr","time":"2019-09-04T19:45:46.63390417Z"}
{"log":"2019-09-04 19:45:46 1 [Warning] Insecure configuration for --pid-file: Location '/var/run/mysqld' in the path is accessible to all OS users. Consider choosing a different directory.\n","stream":"stderr","time":"2019-09-04T19:45:46.634014095Z"}
{"log":"2019-09-04 19:45:46 1 [Warning] 'proxies_priv' entry '@ root@mysql-64846c7974-w7mz9' ignored in --skip-name-resolve mode.\n","stream":"stderr","time":"2019-09-04T19:45:46.634569373Z"}
{"log":"2019-09-04 19:45:46 1 [Note] Event Scheduler: Loaded 0 events\n","stream":"stderr","time":"2019-09-04T19:45:46.638250709Z"}
{"log":"2019-09-04 19:45:46 1 [Note] mysqld: ready for connections.\n","stream":"stderr","time":"2019-09-04T19:45:46.638297182Z"}
{"log":"Version: '5.6.45' socket: '/var/run/mysqld/mysqld.sock' port: 3306 MySQL Community Server (GPL)\n","stream":"stderr","time":"2019-09-04T19:45:46.638302543Z"}

So, the last time something was written to this log file was a while ago. That’s why it didn’t turn up in the Kibana visualization for the last 15 minutes.
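Kibana's default time range is the last 15 minutes, so an entry only shows up if its timestamp lies within that window. The bash sketch below performs that comparison for a given timestamp. It assumes GNU date, the function name is hypothetical, and an optional third argument replaces the current time so the check can be reproduced for a fixed moment.

```bash
#!/usr/bin/env bash
# Sketch: is a timestamp within the last N minutes (relative to "now")?
# Assumes GNU date: -d parses the timestamp, +%s prints epoch seconds.
within_last_minutes() {
  local timestamp="$1" minutes="$2" now="${3:-$(date -u '+%Y-%m-%d %H:%M:%S UTC')}"
  local ts_s now_s
  ts_s="$(date -u -d "$timestamp" +%s)" || return 2
  now_s="$(date -u -d "$now" +%s)" || return 2
  # inside the window if not in the future and at most N minutes old
  (( ts_s <= now_s && now_s - ts_s <= minutes * 60 ))
}
```

For example, within_last_minutes "2019-09-04 19:45:46 UTC" 15 "2019-09-12 12:28:27 UTC" returns exit status 1 (the mysql start-up lines are days older than the window), whereas a "now" of 2019-09-04 19:50:00 UTC would return 0.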

But, let’s check out if we can find the same information in Kibana.

In the visualization, I clicked on button “Show dates”, changed the period to Sep 4, 2019 21:30 – 22:00 (taking into account the time zone) and then clicked on button “Refresh”.

And then the mysql container became visible.
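The log timestamps are in UTC (note the trailing Z), whereas the Kibana time picker uses the browser's time zone by default. Assuming an offset of UTC+2 (CEST, which is consistent with the 21:30 – 22:00 window chosen above), the mysql start-up time can be converted with the bash sketch below. The function name and the fixed offset are assumptions, and it relies on GNU date.

```bash
#!/usr/bin/env bash
# Sketch: shift a UTC timestamp by a fixed number of hours.
# Assumes GNU date ("-d @<epoch seconds>" and +%s).
utc_to_offset() {
  local timestamp="$1" offset_hours="$2" epoch
  epoch="$(date -u -d "$timestamp" +%s)" || return 2
  date -u -d "@$(( epoch + offset_hours * 3600 ))" '+%Y-%m-%d %H:%M:%S'
}
```

utc_to_offset "2019-09-04 19:45:46 UTC" 2 prints 2019-09-04 21:45:46, inside the chosen window. With time zone data installed, TZ=Europe/Amsterdam date -d '2019-09-04 19:45:46 UTC' gives the same local time and also takes daylight saving time into account (Europe/Amsterdam being an assumption as well).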

Next, I looked in the Kibana Dashboard via Discover, clicked on button “Refresh”, and this time lots of results were found.

I clicked on button “Add filter” and in the field “Field” I entered kubernetes.container.name, as “Operator” I chose is and as “Value” I chose mysql. I then added another filter: in the field “Field” I entered message, as “Operator” I chose is and as “Value” I entered mysqld: ready for connections.

I selected the first hit.

Remark:

The second hit is not visible in the listing of the content of the mysql log file, shown earlier, because there I only showed the last 20 lines!

Clicking the icon “>” expands the document.

You can also choose to view the expanded document in JSON:

{
  "_index": "filebeat-7.3.1-2019.09.08-000001",
  "_type": "_doc",
  "_id": "OOpLEW0B1Kd52Ckb7z6X",
  "_version": 1,
  "_score": null,
  "_source": {
    "@timestamp": "2019-09-04T19:45:46.638Z",
    "agent": {
      "hostname": "ubuntu-xenial",
      "id": "6fc91322-3f72-494f-9b52-12df04833853",
      "version": "7.3.1",
      "type": "filebeat",
      "ephemeral_id": "e43d286c-fa3d-4adc-9ca3-d11ddb2b052d"
    },
    "ecs": {
      "version": "1.0.1"
    },
    "host": {
      "containerized": false,
      "name": "ubuntu-xenial",
      "hostname": "ubuntu-xenial",
      "architecture": "x86_64",
      "os": {
        "codename": "Core",
        "platform": "centos",
        "version": "7 (Core)",
        "family": "redhat",
        "name": "CentOS Linux",
        "kernel": "4.4.0-142-generic"
      }
    },
    "stream": "stderr",
    "message": "2019-09-04 19:45:46 1 [Note] mysqld: ready for connections.",
    "log": {
      "offset": 27622,
      "file": {
        "path": "/var/log/containers/mysql-64846c7974-w7mz9_nl-amis-testing_mysql-3d6ce5328e7ebcbdf012791fc87d45374afe137439ff13c28fa75ff5fc408f1d.log"
      }
    },
    "input": {
      "type": "container"
    },
    "kubernetes": {
      "namespace": "nl-amis-testing",
      "replicaset": {
        "name": "mysql-64846c7974"
      },
      "labels": {
        "environment": "testing",
        "pod-template-hash": "64846c7974",
        "version": "1.0",
        "app": "mysql"
      },
      "pod": {
        "uid": "85319a39-cf4c-11e9-95ef-023e591c269a",
        "name": "mysql-64846c7974-w7mz9"
      },
      "node": {
        "name": "minikube"
      },
      "container": {
        "name": "mysql"
      }
    }
  },
  "fields": {
    "@timestamp": [
      "2019-09-04T19:45:46.638Z"
    ],
    "suricata.eve.timestamp": [
      "2019-09-04T19:45:46.638Z"
    ]
  },
  "highlight": {
    "kubernetes.container.name": [
      "@kibana-highlighted-field@mysql@/kibana-highlighted-field@"
    ],
    "message": [
      "2019-09-04 19:45:46 1 [Note] @kibana-highlighted-field@mysqld@/kibana-highlighted-field@: @kibana-highlighted-field@ready@/kibana-highlighted-field@ @kibana-highlighted-field@for@/kibana-highlighted-field@ @kibana-highlighted-field@connections@/kibana-highlighted-field@."
    ]
  },
  "sort": [
    1567626346638
  ]
}
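The sort value in this document appears to be @timestamp expressed as milliseconds since the Unix epoch. The bash sketch below (hypothetical function name, GNU date assumed) converts such a value back into an ISO 8601 UTC timestamp so the two can be compared.

```bash
#!/usr/bin/env bash
# Sketch: convert epoch milliseconds to an ISO 8601 UTC timestamp.
# Assumes GNU date ("-d @<epoch seconds>").
epoch_ms_to_iso() {
  local ms="$1"
  # whole seconds via date, the remaining milliseconds appended with printf
  printf '%s.%03dZ\n' "$(date -u -d "@$(( ms / 1000 ))" '+%Y-%m-%dT%H:%M:%S')" "$(( ms % 1000 ))"
}
```

epoch_ms_to_iso 1567626346638 prints 2019-09-04T19:45:46.638Z, which is the @timestamp of the document.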

In this expanded document you can see the following value for message:

2019-09-04 19:45:46 1 [Note] mysqld: ready for connections.
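Comparing this document with the raw line in the mysql log file shows how the container input maps the JSON written by Docker's json-file logging driver: time becomes @timestamp (stored with millisecond precision), stream is kept, and log, without its trailing newline, becomes message. The bash sketch below mimics that mapping for a single line with sed. It is an illustration only: it assumes GNU sed, handles simple lines like the ones above but not escaped quotes or partial lines, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Rough sketch: map one json-file log line (read from stdin) to the fields
# seen in the Filebeat event. Not a real JSON parser.
json_log_to_fields() {
  # \\n matches the literal "\n" at the end of the log value;
  # \n in the replacement (GNU sed) starts a new output line.
  sed -E 's/^\{"log":"(.*)\\n","stream":"([a-z]+)","time":"([^"]+)"\}$/@timestamp=\3\nstream=\2\nmessage=\1/'
}
```

Piping the "mysqld: ready for connections." line from the log file into json_log_to_fields yields @timestamp=2019-09-04T19:45:46.638297182Z, stream=stderr and message=2019-09-04 19:45:46 1 [Note] mysqld: ready for connections., matching the values in the expanded document.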

Enhancing the exported data

I wanted to try out some form of enhancing the exported log files. So, I decided to add some extra fields in order to add additional information to the output.

When using the log input to read lines from log files, you can, for example, use the following configuration options, which are supported by all inputs.

- fields

Optional fields that you can specify to add additional information to the output. For example, you might add fields that you can use for filtering log data. Fields can be scalar values, arrays, dictionaries, or any nested combination of these. By default, the fields that you specify here will be grouped under a fields sub-dictionary in the output document. To store the custom fields as top-level fields, set the fields_under_root option to true. If a duplicate field is declared in the general configuration, then its value will be overwritten by the value declared here.

[https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-input-log.html#filebeat-input-log-fields]

[https://www.elastic.co/guide/en/beats/filebeat/master/filebeat-input-container.html#filebeat-input-container-fields]

- fields_under_root

If this option is set to true, the custom fields are stored as top-level fields in the output document instead of being grouped under a fields sub-dictionary. If the custom field names conflict with other field names added by Filebeat, then the custom fields overwrite the other fields.

[https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-input-log.html#fields-under-root-log]

[https://www.elastic.co/guide/en/beats/filebeat/master/filebeat-input-container.html#fields-under-root-container]
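The effect of these two input options on the name of a custom field can be summarised in a few lines of bash. This is a sketch of the documented behaviour only (the function name is hypothetical), covering a single field name rather than nested dictionaries.

```bash
#!/usr/bin/env bash
# Sketch: where does an input-level custom field end up in the event?
# Follows the documented "fields" / "fields_under_root" behaviour.
input_field_key() {
  local name="$1" fields_under_root="${2:-false}"
  if [[ "$fields_under_root" == "true" ]]; then
    echo "$name"          # stored as a top-level field
  else
    echo "fields.$name"   # grouped under the "fields" sub-dictionary (default)
  fi
}
```

input_field_key my_custom_field1 true prints my_custom_field1; without the second argument it prints fields.my_custom_field1.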

You can define processors in your configuration to process events before they are sent to the configured output.

[https://www.elastic.co/guide/en/beats/filebeat/current/filtering-and-enhancing-data.html#using-processors]

The add_fields processor adds additional fields to the event. Fields can be scalar values, arrays, dictionaries, or any nested combination of these. By default, the fields that you specify will be grouped under the fields sub-dictionary in the event. To group the fields under a different sub-dictionary, use the target setting. To store the fields as top-level fields, set target: ''.

- target

(Optional) Sub-dictionary to put all fields into. Defaults to fields.

- fields

Fields to be added.

[https://www.elastic.co/guide/en/beats/filebeat/current/add-fields.html]
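Similarly, the documented target rule of the add_fields processor can be sketched as follows: no target means the fields sub-dictionary, an empty target means top level, and any other value is used as the sub-dictionary name. The function is hypothetical and only computes the resulting field name.

```bash
#!/usr/bin/env bash
# Sketch of the add_fields "target" rule:
#   no target -> fields.<name>; target '' -> <name>; otherwise -> <target>.<name>
add_fields_key() {
  local name="$1"
  if (( $# < 2 )); then
    echo "fields.$name"
  elif [[ -z "$2" ]]; then
    echo "$name"
  else
    echo "$2.$name"
  fi
}
```

add_fields_key my_custom_field2 my-custom-sub-dictionary1 prints my-custom-sub-dictionary1.my_custom_field2, and add_fields_key my_custom_field5 prints fields.my_custom_field5.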

Based on the information above, in the subdirectory configmaps/configmap-filebeat, I changed the file filebeat.yml to the following content:

filebeat.inputs:
- type: container
  paths:
    - /var/log/containers/mysql*.log
    - /var/log/containers/booksservice*.log
  fields:
    my_custom_field1: 'value_of_my_custom_field1'
  fields_under_root: true
  processors:
    - add_kubernetes_metadata:
        in_cluster: true
        host: ${NODE_NAME}
        matchers:
        - logs_path:
            logs_path: "/var/log/containers/"
    - add_fields:
        target: my-custom-sub-dictionary1
        fields:
          my_custom_field2: 'value_of_my_custom_field2'
          my_custom_field3: 'value_of_my_custom_field3'
    - add_fields:
        target: my-custom-sub-dictionary2
        fields:
          my_custom_field4: 'value_of_my_custom_field4'
    - add_fields:
        fields:
          my_custom_field5: 'value_of_my_custom_field5'

# To enable hints based autodiscover, remove `filebeat.inputs` configuration and uncomment this:
#filebeat.autodiscover:
#  providers:
#    - type: kubernetes
#      host: ${NODE_NAME}
#      hints.enabled: true
#      hints.default_config:
#        type: container
#        paths:
#          - /var/log/containers/*${data.kubernetes.container.id}.log

processors:
  - add_cloud_metadata:
  - add_host_metadata:

cloud.id: ${ELASTIC_CLOUD_ID}
cloud.auth: ${ELASTIC_CLOUD_AUTH}

output.elasticsearch:
  hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
  username: ${ELASTICSEARCH_USERNAME}
  password: ${ELASTICSEARCH_PASSWORD}
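Based on the documentation quoted above, the custom fields from this configuration are expected to appear in each event as sketched below. This is only the expected structure derived from the configuration, with all other event fields omitted. It is not output captured from Elasticsearch.

```yaml
# Expected placement of the custom fields only; all other event fields omitted.
# From the input's fields option with fields_under_root: true -> top level
my_custom_field1: value_of_my_custom_field1
# From add_fields with target: my-custom-sub-dictionary1
my-custom-sub-dictionary1:
  my_custom_field2: value_of_my_custom_field2
  my_custom_field3: value_of_my_custom_field3
# From add_fields with target: my-custom-sub-dictionary2
my-custom-sub-dictionary2:
  my_custom_field4: value_of_my_custom_field4
# From add_fields without a target -> default "fields" sub-dictionary
fields:
  my_custom_field5: value_of_my_custom_field5
```

In Kibana Discover, nested fields like these are listed under dotted names, such as my-custom-sub-dictionary1.my_custom_field2 and fields.my_custom_field5.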

Because I had made these changes, I deleted and re-installed the Filebeat release (via Helm, the package manager for Kubernetes) and the ConfigMap.

I used Postman to retrieve books from the book catalog. I did this for version 1.0 of the BooksService application in the TST environment.

Again, I opened Discover in the Kibana Dashboard and clicked the “Refresh” button, and results were found.

I selected the first hit and expanded the document by clicking the “>” icon.

In the Discover pane, fields that are missing from the index pattern's field list are shown with a hazard icon and a warning.

To get rid of these warnings, I went to Management | Kibana | Index Patterns in the Kibana Dashboard and clicked the “Refresh field list” icon.

Again, I opened Discover in the Kibana Dashboard, selected the first hit and expanded the document.

This showed that enhancing the exported data by adding some extra fields worked.

So now it’s time to conclude this article. I tried out some of the functionality of Elastic Filebeat. Besides log aggregation (making log information available at a centralized location), I also described how I filtered and enhanced the exported log data with Filebeat.

In a future article, I will also be using Logstash between Filebeat and Elasticsearch.

The post Using Elastic Stack, Filebeat (for log aggregation) appeared first on AMIS Oracle and Java Blog.